由于现在很多网站都有反爬虫机制,同一个ip不能频繁访问同一个网站,这就使得我们在进行大量数据爬取时需要使用代理进行伪装,本博客给出几个免费ip代理获取网站爬取ip代理的代码,可以嵌入到不同的爬虫程序中去,已经亲自测试有用。需要的可以拿去使用(本人也是参考其他人爬虫程序实现的,但是忘记原地址了)。

# coding=utf-8

import urllib2

import re

proxy_list = []

total_proxy = 0

def get_proxy_ip():

global proxy_list

global total_proxy

request_list = []

headers = {

'Host': 'www.xicidaili.com',

'User-Agent': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)',

'Accept': r'application/json, text/javascript, */*; q=0.01',

'Referer': r'http://www.xicidaili.com/',

}

for i in range(3, 11):

request_item = "http://www.xicidaili.com/nn/" + str(i)

request_list.append(request_item)

for req_id in request_list:

req = urllib2.Request(req_id, headers=headers)

response = urllib2.urlopen(req)

html = response.read().decode('utf-8')

ip_list = re.findall(r'\d+\.\d+\.\d+\.\d+', html)

port_list = re.findall(r'<td>\d+</td>', html)

for i in range(len(ip_list)):

total_proxy += 1

ip = ip_list[i]

port = re.sub(r'<td>|</td>', '', port_list[i])

proxy = '%s:%s' % (ip, port)

proxy_list.append(proxy)

return proxy_list

def get_proxy_ip1():

global proxy_list

global total_proxy

request_list = []

headers = {

'Host': 'www.kuaidaili.com',

'User-Agent': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)',

'Accept': r'application/json, text/javascript, */*; q=0.01',

'Referer': r'www.kuaidaili.com/',

}

for i in range(1, 10):

request_item = "https://www.kuaidaili.com/free/inha/" + str(i)+"/"

request_list.append(request_item)

for req_id in request_list:

req = urllib2.Request(req_id, headers=headers)

response = urllib2.urlopen(req)

html = response.read().decode('utf-8')

ip_list = re.findall(r'\d+\.\d+\.\d+\.\d+', html)

port_list = re.findall(r'<td data-title="PORT">\d+</td>', html)

for i in range(len(ip_list)):

total_proxy += 1

ip = ip_list[i]

port = re.findall(r'\d+', port_list[i])[0]

proxy = '%s:%s' % (ip, port)

proxy_list.append(proxy)

return proxy_list

def get_proxy_ip2():

global proxy_list

global total_proxy

request_list = []

headers = {

'Host': 'www.ip3366.net',

'User-Agent': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)',

'Accept': r'application/json, text/javascript, */*; q=0.01',

'Referer': r'www.ip3366.net/',

}

for i in range(1, 10):

request_item = "http://www.ip3366.net/?stype=1&page=" + str(i)

request_list.append(request_item)

for req_id in request_list:

req = urllib2.Request(req_id, headers=headers)

response = urllib2.urlopen(req)

html = response.read()

ip_list = re.findall(r'\d+\.\d+\.\d+\.\d+', html)

port_list = re.findall(r'<td>\d+</td>', html)

for i in range(len(ip_list)):

total_proxy += 1

ip = ip_list[i]

port = re.sub(r'<td>|</td>', '', port_list[i])

proxy = '%s:%s' % (ip, port)

proxy_list.append(proxy)

return proxy_list

if __name__=="__main__":

get_proxy_ip()

# get_proxy_ip1()

get_proxy_ip2()

print("获取ip数量为:" + total_proxy)

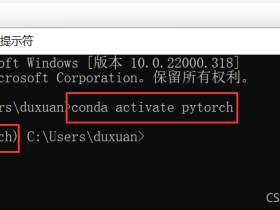

获取结果:

使用代理访问的方法:

proxy_ip = random.choice(proxy_list)

user_agent = random.choice(user_agent_list)

print proxy_ip

print user_agent

proxy_support = urllib2.ProxyHandler({'http': proxy_ip})

opener = urllib2.build_opener(proxy_support, urllib2.HTTPHandler)

urllib2.install_opener(opener)

req = urllib2.Request(url)

req.add_header("User-Agent", user_agent)

c = urllib2.urlopen(req, timeout=10)

文章末尾固定信息

我的微信

我的微信

一个码农、工程狮、集能量和智慧于一身的、DIY高手、小伙伴er很多的、80后奶爸。

服务器0元试用,首购低至0.9元/月起

服务器0元试用,首购低至0.9元/月起

评论